How to manage Conduit piping

Contents

- 1 Introduction

- 2 Pipe Parameter

- 3 Macros

- 4 Example Cases

- 4.1 How to create a Macro and an Operation

- 4.2 How to use CommandName and AddComment

- 4.3 How to use Source: data

- 4.4 How to use Source: scanned_unit

- 4.5 How to use Source: input_parameter

- 4.6 How to use Source: data_ptr

- 4.7 How to use ‘Piggy-Back’ Input Parameter

- 4.8 How to use Replace Tree Component

- 4.9 How to render, store and record media

Introduction

The purpose of this document is to teach the user how to use the pipe parameter operation in a Conduit request as well as how to set up the command’s parameters.

Conduit can be thought of as an executor of distinct Operations. Each operation is a command or a macro encapsulating some behavior into a named entity. Many operations are designed to directly manipulate the device history record (DHR) of a serial number, but ultimately an operation can exhibit nearly any behavior deemed useful and/or relevant to production manufacturing. Each operation has a number of prompts or parameters required in order to execute successfully, that number ranging from zero (no parameters at all) to a dozen or more.

Conduit’s primary design methodology has been to create a large number of relatively simple, well-defined operations specific to a single action. By composing multiple operations into a single request, clients can perform complicated tasks using these relatively simple building blocks.

By default, Conduit supports blocks or sets of operations in a single request. Performing lots of “work” is as simple as sending as many operations as required to adjust (append to) the unit’s history accordingly. However, in some cases, users may want some level of interoperability between the discrete operations. More concretely, they may want to base the inputs of operation N on information obtained by executing operation N - 1. Since each operation is its own miniature “program” or function, users need a way to feed or share data from one operation into another.

This is achieved by using Pipe operations, more specifically PipeParameter.

Before outlining the actual usage of PipeParameter, there is one thing to keep in mind: PipeParameter is really just a way to avoid making multiple small requests to Conduit. Anything you can do with piping could be mimicked in a client application by asking Conduit to execute a single operation, taking the results of that operation, and then constructing a follow-up call with the extracted fields. That approach is cumbersome and likely very inefficient, but it is functionally all that PipeParameter does. We are gluing a composed set of operations together so we can reduce round trips to Conduit and avoid implementing complicated client logic.

Pipe Parameter

PipeParameter provides a mechanism to bypass the typical prompting requirements of a Conduit operation: it enables clients to feed/push/pipe data from some source field into a destination field on a subsequent, non-pipe operation. PipeParameter only interacts with NON-PIPE operations, so sequential PipeParameter calls act as if the adjacent PipeParameter calls aren’t present. The from_field (data) source for a PipeParameter call is the last NON-PIPE operation executed before the PipeParameter call, and the destination to_field is on the first NON-PIPE operation following the PipeParameter call. PipeParameter itself has 3 parameters driving its execution: Source, From Field and To Field.

Figure 1: Three Parameters Of PipeParameter
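The neighbor rule described above can be sketched in Python. This is a minimal illustration and not Conduit's implementation: operations are modeled as dicts with a `name` key, the helper name `pipe_endpoints` is hypothetical, and the sample request is invented for the example.

```python
from typing import Optional, Tuple

PIPE = "PipeParameter"


def pipe_endpoints(ops: list, index: int) -> Tuple[Optional[int], Optional[int]]:
    """Return (source_index, destination_index) for the pipe at ops[index].

    The source is the last non-pipe operation before the pipe and the
    destination is the first non-pipe operation after it. Adjacent pipes
    are skipped in both directions. None means no such operation exists.
    """
    if ops[index]["name"] != PIPE:
        raise ValueError("ops[index] is not a PipeParameter operation")
    before = [i for i in range(index - 1, -1, -1) if ops[i]["name"] != PIPE]
    after = [i for i in range(index + 1, len(ops)) if ops[i]["name"] != PIPE]
    return (before[0] if before else None, after[0] if after else None)


# Hypothetical request: two adjacent pipes sit between two non-pipe operations.
ops = [
    {"name": "AddMeasurementKey"},
    {"name": PIPE, "from_field": "measurement_key", "to_field": "comment_text"},
    {"name": PIPE, "from_field": "measurement_key", "to_field": "piggyback"},
    {"name": "AddComment"},
]

# Both pipes read from operation 0 and write to operation 3.
print(pipe_endpoints(ops, 1))
print(pipe_endpoints(ops, 2))
```

Because each pipe ignores its pipe neighbors, several pipes placed back to back all read from the same earlier operation and all populate the same later operation.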

Source

The source field tells the PipeParameter operation where to look for the piece of information identified by the from_field parameter. At this time the possible values for source are: Data (data), Data Pointer (data_ptr), Input Parameter (input_parameter) and Scanned Unit (scanned_unit).

- Data (data)

The default source when not provided. Using source data means the Pipe Parameter call will seek out the specified from_field in the execution results of the last NON-PIPE operation executed before this call. Note that not all operations produce meaningful data, and there is not currently a published schema of the data every operation produces (if any is produced at all).

- Data Pointer (data_ptr)

Identical to data, except that the from_field is specified using JSON Pointer syntax.

- Input Parameter (input_parameter)

Take the from_field input parameter of the previously executed NON-PIPE operation and push that value into the to_field of the next NON-PIPE operation.

- Scanned Unit (scanned_unit)

The from_field, specified using JSON pointer syntax, is taken from the snapshot of unit information Conduit gathers while scanning the transaction unit supplied during the Conduit request. At the moment we have not published a formal list of fields available in the scanned unit snapshot but quite a bit of data is gathered during the process of scanning the transaction unit.

From Field

Within the specified source, identify the value associated with the from_field name.

To Field

Populate the to_field name with the value extracted from source -> from_field, as if the client had explicitly populated that value when constructing the call in the request.

Macros

The macro mechanism in Conduit and the command register allow administrators to encapsulate custom behaviors into a single named entity. This includes removing optional fields not relevant for that use case and overriding prompts to better fit the specific manufacturing domain. Many “built-in” macros take advantage of piping, including:

- StartDefectReprocessing

- RESCAN

Example Cases

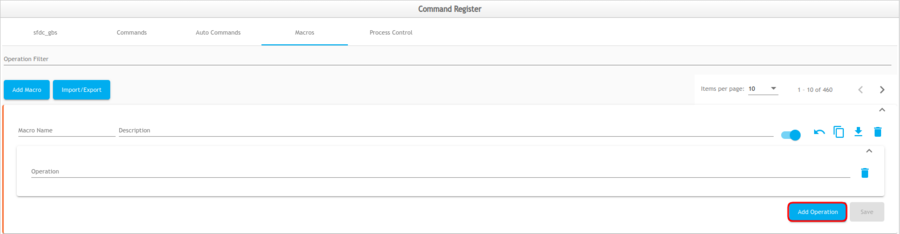

How to create a Macro and an Operation

To create a new Macro and new operations follow the steps below:

- Select the Macro button. A new window will appear under the Macro button.

- Enter the Macro Name.

- Enter the Description.

- Enter the Operation.

- If users need to add more operations they have to select the Add Operation button.

Figure 3: Operation Button

- Enter the values for the fields of the selected command.

- Select the Save button to save the Macro.

How to use CommandName and AddComment

The easiest way to understand using Pipe Parameter is by example. We’ll start with a few simple cases, and then move on to more exotic and/or tedious applications.

A macro consists of one or more Operations, and each operation consists of a CommandName which has one or more fields that will be filled with their corresponding value.

In order to describe an instance of a Conduit Operation we will use the following syntax:

Command: CommandName

Values: <field_name1: value1> <field_name2: value2> <field_nameN: valueN>

To do so follow the steps below:

- Create a new Macro.

- Enter the Macro Name.

- Enter the Description.

- Enter the command Add Attribute in the Operation field.

- Enter the Attribute Name value.

- Enter the Attribute Value value.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Add Comment in the Operation field.

- Enter the Comment Text value.

Figure 4: CommandName and AddComment Example

The structure below represents the macro from the image above.

Command: AddAttribute

Values: <attr_name: Command name> <attr:value: add attribute command>

Command: PipeParameter

Values: <source: input_parameter> <from_field: attribute value> <to_field: comment_text>

Command: AddComment

Values: <comment_text: This is some text>

Note: A Conduit command is actually a JSON object with at least a field called name, plus any other parameters provided, so the actual AddComment command above could be modeled as:

{"name": "AddComment", "comment_text": "This is some text"}
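A short Python sketch of that modeling, using only the standard `json` module. The helper name `make_command` is hypothetical, and placing several commands in a plain list is an assumption made for illustration; the request envelope Conduit actually expects is not described in this document.

```python
import json


def make_command(name: str, **fields: str) -> dict:
    """Model a Conduit command as a dict: a name plus any other parameters."""
    return {"name": name, **fields}


# The AddComment command from the note above.
add_comment = make_command("AddComment", comment_text="This is some text")
print(json.dumps(add_comment))

# A PipeParameter call is modeled the same way; source is omitted here,
# which the document describes as defaulting to data.
pipe = make_command(
    "PipeParameter",
    from_field="measurement_key",
    to_field="comment_text",
)
print(json.dumps([pipe, add_comment], indent=2))
```

Keeping commands as plain dicts makes it easy to build a macro programmatically and serialize it only at the point where the request is sent.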

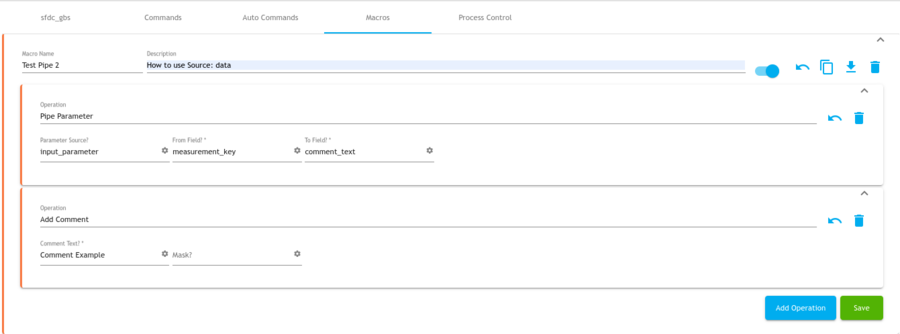

How to use Source: data

This is the simplest possible Pipe Parameter scenario. AddMeasurementKey produces a data field called measurement_key, which we pipe to the comment_text field on the AddComment operation.

To do so follow the steps below:

- Create a new Macro.

- Enter the Macro Name.

- Enter the Description.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Add Comment in the Operation field.

- Enter the Comment Text value.

Figure 5: Source data Example

The structure below represents the macro from the image above.

Command: PipeParameter

Values: <from_field: measurement_key> <to_field: comment_text>

Command: AddComment

Values: <comment_text: This is some text>

How to use Source: scanned_unit

The ScannedUnit snapshot produced during the scanning process holds a large dataset, including an active list of unremoved attributes. Here we use JSON Pointer syntax to access the attr_data field of the first element of the attributes array under the unit_elements root element.

To do so follow the steps below:

- Create a new Macro.

- Enter the Macro Name.

- Enter the Description.

- Enter the command Scan Unit in the Operation field.

- Enter the Unit Serial Number value.

- Enter the Part Number value.

- Enter the Quantity value.

- Enter the Revision value.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Add Comment in the Operation field.

- Enter the Comment Text value.

Figure 6: Source scanned_unit Example

The structure below represents the macro from the image above.

Command: PipeParameter

Values: <from_field: /unit_elements/attributes/0/attr_data> <source: scanned_unit> <to_field: comment_text>

Command: AddComment

Values: <comment_text: Comment example>
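To make the pointer used above concrete, here is a minimal JSON Pointer (RFC 6901) resolver in Python. It is a sketch for illustration, not Conduit's code. The snapshot contents are hypothetical (no formal list of scanned unit fields is published); only the path `/unit_elements/attributes/0/attr_data` and the `serial_number` field name come from this document.

```python
from typing import Any


def resolve_pointer(document: Any, pointer: str) -> Any:
    """Resolve an RFC 6901 JSON Pointer against parsed JSON data."""
    if pointer == "":
        return document
    if not pointer.startswith("/"):
        raise ValueError("a non-empty JSON Pointer must start with '/'")
    current = document
    for raw in pointer[1:].split("/"):
        # Unescape in this order: "~1" means "/", then "~0" means "~".
        token = raw.replace("~1", "/").replace("~0", "~")
        if isinstance(current, list):
            current = current[int(token)]  # array elements are addressed by index
        else:
            current = current[token]
    return current


# Hypothetical snapshot with invented values.
scanned_unit = {
    "serial_number": "SN-0001",
    "unit_elements": {
        "attributes": [
            {"attr_name": "color", "attr_data": "blue"},
            {"attr_name": "size", "attr_data": "large"},
        ]
    },
}

# The value that would be piped into comment_text.
comment_text = resolve_pointer(scanned_unit, "/unit_elements/attributes/0/attr_data")
print(comment_text)
```

Each `/`-separated token selects either an object key or an array index, so `0` here picks the first entry of the attributes array.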

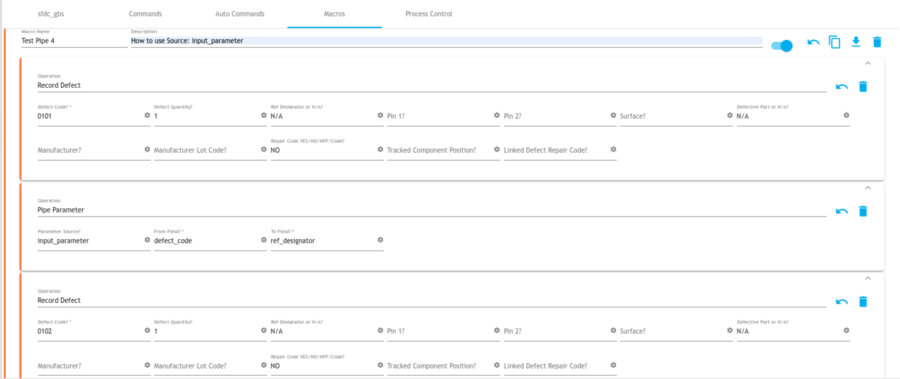

How to use Source: input_parameter

Sometimes we want to pass the same value provided to one operation to a later operation in the transaction instead of prompting for a piece of data twice. Here we pipe the defect_code input parameter from the first RecordDefect call to the ref_designator field of the second RecordDefect call.

To do so follow the steps below:

- Create a new Macro.

- Enter the Macro Name.

- Enter the Description.

- Enter the command Record Defect in the Operation field.

- Enter the Defect Code value.

- Enter the Defect Quantity value.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Record Defect in the Operation field.

- Enter the Defect Code value.

- Enter the Defect Quantity value.

Figure 7: Input Parameter Example

The structure below represents the macro from the image above.

Command: RecordDefect

Values: <defect_code: 0101> <ref_designator: N/A>

Command: PipeParameter

Values: <from_field: defect_code> <source: input_parameter> <to_field: ref_designator>

Command: RecordDefect

Values: <defect_code: 0102>
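The effect of this macro can be sketched in Python as follows. This is a simplified model under stated assumptions: commands are plain dicts, the helper name `pipe_input_parameter` is hypothetical, and how Conduit treats a to_field that the destination call already supplies is not covered.

```python
def pipe_input_parameter(previous_op: dict, next_op: dict,
                         from_field: str, to_field: str) -> dict:
    """Copy an input parameter of previous_op into to_field on a copy of next_op."""
    if from_field not in previous_op:
        raise KeyError(f"{from_field!r} was not supplied to {previous_op['name']}")
    piped = dict(next_op)  # leave the original command untouched
    piped[to_field] = previous_op[from_field]
    return piped


first = {"name": "RecordDefect", "defect_code": "0101", "ref_designator": "N/A"}
second = {"name": "RecordDefect", "defect_code": "0102"}

# The second RecordDefect call as it would look after the pipe is applied.
effective_second = pipe_input_parameter(first, second, "defect_code", "ref_designator")
print(effective_second)
```

The second call ends up with ref_designator set to the first call's defect_code, without the client supplying that value a second time.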

How to use Source: data_ptr

This source is the JSON Pointer version of the data source: it reads the results of the previous NON-PIPE operation. The plain data source version tries very hard to identify the from_field, including iterating through various elements. The data_ptr version allows you to explicitly identify the from_field if that is necessary. The plain data source version is more approachable in most cases, as data_ptr usage requires explicit knowledge of the data results produced by the operation.

To do so follow the steps below:

- Create a new Macro.

- Enter the Macro Name.

- Enter the Description.

- Enter the command Add Measurement Key in the Operation field.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Add Comment in the Operation field.

- Enter the Comment Text value.

Figure 8: Source data_ptr Example

The structure below represents the macro from the image above.

Command: AddMeasurementKey

Command: PipeParameter

Values: <from_field: /0/data/measurement_key> <source: data_ptr> <to_field: comment_text>

Command: AddComment

Values: <comment_text: This is some text>
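The difference between the two lookups can be illustrated with a small Python sketch. The result shape below is an assumption inferred from the pointer `/0/data/measurement_key`, the value is invented, and the depth-first search is only one plausible reading of "iterating through various elements", not Conduit's actual algorithm. Both helper names are hypothetical.

```python
from typing import Any, Optional


def find_field(results: Any, field: str) -> Optional[Any]:
    """Depth-first search for the first value stored under key `field`.

    Returns None when the key is absent (or when its value is None).
    """
    if isinstance(results, dict):
        if field in results:
            return results[field]
        children = list(results.values())
    elif isinstance(results, list):
        children = results
    else:
        return None
    for child in children:
        found = find_field(child, field)
        if found is not None:
            return found
    return None


def walk_pointer(results: Any, pointer: str) -> Any:
    """Follow a simple JSON Pointer step by step (~0/~1 escapes not handled)."""
    current = results
    for token in pointer.split("/")[1:]:
        current = current[int(token)] if isinstance(current, list) else current[token]
    return current


# Assumed result shape with an invented value.
results = [{"data": {"measurement_key": "MK-42"}}]

# data: only the field name is needed; the search locates it.
print(find_field(results, "measurement_key"))
# data_ptr: the full path must be known in advance.
print(walk_pointer(results, "/0/data/measurement_key"))
```

The search is convenient but returns whichever match it meets first; the pointer is unambiguous, at the cost of knowing the result layout.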

How to use ‘Piggy-Back’ Input Parameter

Sometimes we may want to propagate the results of an operation or operations executed earlier in a set of commands to operations much later in the transaction. As soon as we need to bridge across multiple NON-PIPE operations we need to get creative. Each operation has a known list of field names relevant to its execution as defined in the client-command-registry. This means that any fields on that operation outside this list are effectively ignored.

That means we can pipe fields unrelated to an operation to a field name that operation doesn’t care about; then, after executing that intermediate operation, we can pipe that unrelated field further down the list of calls. This allows us to percolate meaningful data from an earlier call all the way to the last operation in a transaction.

Note: Piggyback is an example of a ‘to_field’ that won’t collide with any field name the intermediate operation(s) might consume during execution.

To do so follow the steps below:

- Create a new Macro.

- Enter the Macro Name.

- Enter the Description.

- Enter the command Read Flex Field in the Operation field.

- Enter the Reference Table value.

- Enter the Reference Field value.

- Enter the Reference Value value.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Read Flex Field in the Operation field.

- Enter the Reference Table value.

- Enter the Reference Field value.

- Enter the Reference Value value.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Add Attribute in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Add Attribute in the Operation field.

- Enter the Attribute Name value.

- Enter the Attribute Value value.

Figure 9: ‘Piggy-Back’ Input Parameter Example

The structure below represents the macro from the image above.

Command: ReadFlexField

Values: <flex_field: board_label_name> <reference_field: part_number> <reference_table: part> <reference_value: ~CDIAGPART001~>

Command: PipeParameter

Values: <from_field: flex_value> <to_field: piggyback>

Command: ReadFlexField

Values: <flex_field: board_algorithm> <reference_field: part_number> <reference_table: part> <reference_value: ~CDIAGPART001~>

Command: PipeParameter

Values: <from_field: piggyback> <source: input_parameter> <to_field: attr_name>

Command: PipeParameter

Values: <from_field: flex_value> <to_field: attr_data>

Command: AddAttribute

Values: <attribute_name: attr_name> <attribute_value: attr_data>
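The flow of values through this macro can be traced with a small Python simulation. It is a sketch under assumptions: commands are dicts, `execute` is a stub standing in for Conduit, the flex field values are invented, and only the data and input_parameter sources are handled.

```python
PIPE = "PipeParameter"

# Hypothetical flex field values; real values would come from Conduit.
FLEX_VALUES = {"board_label_name": "LABEL_A", "board_algorithm": "ALG_7"}


def execute(op: dict) -> dict:
    """Stub executor: return the data an operation would produce."""
    if op["name"] == "ReadFlexField":
        # Unknown fields such as "piggyback" are simply ignored here.
        return {"flex_value": FLEX_VALUES[op["flex_field"]]}
    return {}


def run(ops: list) -> list:
    """Run operations in order and return the effective non-pipe calls."""
    executed = []
    last_op, last_data = {}, {}
    pending = {}  # piped values waiting for the next non-pipe operation
    for op in ops:
        if op["name"] == PIPE:
            source = op.get("source", "data")  # data is the default source
            if source not in ("data", "input_parameter"):
                raise ValueError(f"source {source!r} is not covered by this sketch")
            origin = last_op if source == "input_parameter" else last_data
            pending[op["to_field"]] = origin[op["from_field"]]
            continue
        last_op = {**op, **pending}  # piped values join the call's inputs
        pending = {}
        last_data = execute(last_op)
        executed.append(last_op)
    return executed


part = {"reference_table": "part", "reference_field": "part_number",
        "reference_value": "~CDIAGPART001~"}
macro = [
    {"name": "ReadFlexField", "flex_field": "board_label_name", **part},
    {"name": PIPE, "from_field": "flex_value", "to_field": "piggyback"},
    {"name": "ReadFlexField", "flex_field": "board_algorithm", **part},
    {"name": PIPE, "source": "input_parameter", "from_field": "piggyback", "to_field": "attr_name"},
    {"name": PIPE, "from_field": "flex_value", "to_field": "attr_data"},
    {"name": "AddAttribute"},
]

final_call = run(macro)[-1]
print(final_call)
```

The first flex value rides along on the second ReadFlexField call as an ignored input, which is what lets the input_parameter pipe recover it afterwards for AddAttribute.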

How to use Replace Tree Component

This macro finds a tree component attached somewhere in the scanned unit’s component hierarchy and replaces that component.

To do so follow the steps below:

- Create a new Macro.

- Enter the Macro Name.

- Enter the Description.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Find Unit Tree Component in the Operation field.

- Enter the Unit Serial Number value.

- Enter the Component id value.

- Enter the Component Part Number value.

- Enter the Reference Designator value.

- Enter the Not Found Error Message value.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Replace Unit Component in the Operation field.

- Enter the Unit Serial Number value.

- Enter the Old Component id value.

- Enter the Component id value.

Figure 10: Replace Tree Component Example

The structure below represents the macro from the image above.

Command: PipeParameter

Values: <from_field: /serial_number> <source: scanned_unit> <to_field: unit_serial_number>

Command: FindUnitTreeComponent

Values: <not_found_error_message: Unable to identify component to remove>

Command: PipeParameter

Values: <from_field: parent_serial_number> <source: data> <to_field: unit_serial_number>

Command: PipeParameter

Values: <from_field: component_id> <source: input_parameter> <to_field: old_component_id>

Command: ReplaceUnitComponent

Values: <unit_serial_number: unit_serial_number> <old_component_id: old_component_id> <component_id: component_id>

How to render, store and record media

It is possible to render a label, store the rendered file as media with an alias and a description, and then record that media.

To do so follow the steps below:

- Create a new Macro.

- Enter the Macro Name.

- Enter the Description.

- Enter the command Render Label in the Operation field.

- Enter the Unit Serial Number value.

- Enter the Label Name value.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Store Media in the Operation field.

- Enter the Original Filename value.

- Enter the Media Content value.

- Enter the Media Payload File value.

- Enter the Mime Type value.

- Enter the Media Tag value.

- Enter the Storage Level value.

- Enter the Media Description value.

- Enter the Media Alias value.

- Create a new operation.

- Enter the command Pipe Parameter in the Operation field.

- Enter the Parameter Source value.

- Enter the From Field value.

- Enter the To Field value.

- Create a new operation.

- Enter the command Record Media in the Operation field.

- Enter the Media Identifier(s) value.

Figure 11: Render, Store And Record Media Example

The structure below represents the macro from the image above.

Command: RenderLabel

Values: <label_name: le_24x_test_dup_allowed> <test_print: 0>

Command: PipeParameter

Values: <from_field: rendered_file> <source: data> <to_field: payload_file>

Command: PipeParameter

Values: <from_field: content_type> <source: data> <to_field: mime_type>

Command: PipeParameter

Values: <from_field: original_filename> <source: data> <to_field: original_filename>

Command: StoreMedia

Values: <media_alias: Weir Hardness Certificate Testing> <media_description: Rendered Label> <media_tag: CPI> <storage_level: site>

Command: PipeParameter

Values: <from_field: identifier> <source: data> <to_field: media_identifier>

Command: RecordMedia

Values: <media_identifiers: media_identifier>